Ma vs Musk on the supremacy of machines over mankind

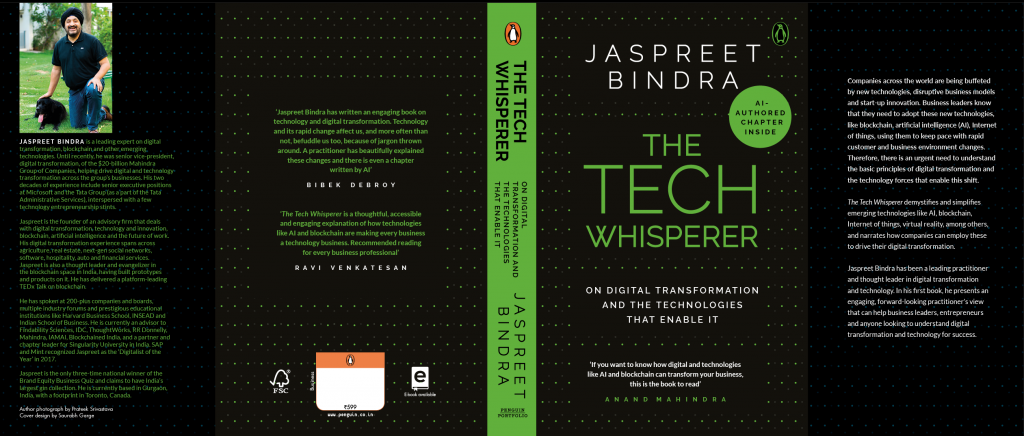

Jaspreet Bindra

AI is perhaps the hottest technology in the world right now, and certainly the most hotly debated. All of us are inundated daily with claims that it will either transform our lives for the better or completely destroy us. Depending on which side of the debate you are on, AI will either take away our drudgery or take away our jobs, become our pet or enslave us, save us from global warming or destroy us before that does.

It was a rambling discussion in Shanghai last week that brought Artificial Intelligence into focus once again. The participants were a clearly jet-lagged Elon Musk, the technology legend, and a very composed Jack Ma, the largest purveyor of goods over the Internet. While the discussion wandered all over, from Pong to the Matrix to Mars, what caught my attention was Musk shooting down Ma’s optimism by saying that these could be “famous last words”. Musk has been consistently pessimistic about AI; in another famous joust, this time with Facebook’s Mark Zuckerberg, he tweeted that “his (Zuckerberg’s) understanding of the subject is limited.”

In all this confusion, let us first try and determine what AI is. The world became aware of something like non-human intelligence when IBM’s Deep Blue defeated the world champion Garry Kasparov at chess in 1997. This was reinforced when Watson, again from IBM, defeated two of the most successful champions of the quiz show Jeopardy! on television. As a breathless New York Times wrote the following day, “From now on, if the answer is ‘the computer champion on “Jeopardy!”’, the question will be, ‘What is Watson?’” However, the last nail in the proverbial coffin of human superiority was hammered in when Google DeepMind’s AlphaGo comprehensively vanquished the world champion in Go, a game vastly more complex than chess and one that requires very human talents to play. This demonstration of Deep Learning, perhaps the most exciting field of AI, was what unnerved the academic and scientific world, besides reducing the (human) Go champion to tears.

So, what is AI? Gil Press, a respected journalist and researcher, contends that AI’s history goes back to 1308, when Catalan poet and theologian Ramon Llull perfected his method of using paper-based mechanical means to create new knowledge from a combination of concepts. Nearly five hundred years later came an 18th-century hoax called the Mechanical Turk, an elaborate machine which could play chess with you and sometimes win, until people realised there was a human hidden inside it! (Amazon’s product Mechanical Turk takes its name from this, though that is another story.) The true birth of AI was perhaps in the 1950s, with Alan Turing and his eponymous Turing test, which proposed a way to determine whether a machine was intelligent or not. Turing’s earlier wartime work had helped break Enigma, the legendary German cipher machine of WW2, a feat that played a pivotal role in the Allies defeating Germany.

It was John McCarthy, then at Dartmouth College and one of the Founding Fathers of AI, who coined the term ‘Artificial Intelligence’ and proceeded to define it at its chilling best: ‘a branch of computer science concerned with making computers behave like humans.’ AI, in fact, is not one technology; it is an overarching term that comprises several sub-technologies. Machine Learning (ML) is perhaps the most talked about, where a machine learns through extensive training how an apple is different from an orange, for example. There is also Natural Language Processing or NLP, which is at the heart of Alexa. There is Machine Vision, giving rise to Image Recognition (IR), which helps a machine spot cat videos on YouTube, and there is Robotics, which is perhaps what fascinates us humans the most – R2D2, humanoids, Sophia and robotic soldiers. And, finally, there is Deep Learning – the latest offspring within AI, which defeated the Go champion.

So, will AI be a boon to humankind, or the harbinger of our destruction? Will it be ‘Our Final Invention’, as author James Barrat famously argued, or will it be a ‘gift for humanity’, as celebrated Chinese technologist Kai-Fu Lee contends? I believe that we do not yet know the final answer. Every new technology that came in – computing, electricity, even motor cars – was supposed to destroy mankind as we knew it, and was proclaimed to be the End of the World. Humankind, however, managed to domesticate every new technology, and use it for quantum improvements to our lifestyles, incomes and prosperity. AI optimists like Ma, Lee and Zuckerberg assert that it will be no different this time. Humans will adapt to AI, and use it to further improve our well-being – in jobs, for example, the tedious, repetitive work will be taken by AI and the intelligent, caring, loving work will be done by humans. The WEF predicts that AI will displace 75mn jobs by 2022 but will also create 133mn new ones. So, net positive.

Not true, say the naysayers like Musk, Gates and Gaiman: this time, it is different. AI is the first technology that has the ability to learn and think, and even out-think us. This ability is increasing exponentially, and soon it will outpace humans. In fact, Ray Kurzweil, the famous tech futurist, has even put a date to it: 2045 will be when the Singularity happens, the point at which AI becomes equal to or more powerful than human intelligence and, as per Wikipedia, ‘technological growth becomes uncontrollable and irreversible, resulting in unfathomable changes to human civilization’.

I have always been a technology optimist, but in this case, I also lean a bit towards the Elon Musk camp. AI will surpass us eventually, and humans will unwittingly aid it in that effort. This is summed up in another, far more articulate quote by Musk, where he says: “AI doesn’t have to be evil to destroy humanity – if AI has a goal and humanity just happens to be in the way, it will destroy humanity as a matter of course without even thinking about it, no hard feelings.”

Yes. No hard feelings.